The Importance of the Edge for the Industrial Internet of Things in the Energy Industry

Stephen Sponseller | Kepware

It is clear that the Industrial Internet of Things (IIoT) has arrived—and is here to stay. Today’s IIoT is perhaps best defined by interconnectivity. This enables better monitoring, information gathering, role-based information presentation, and operator situational awareness—and ultimately leads to improved decision-making, increased optimization, improved safety, and lowered costs.

The Energy Industry in particular is well-positioned to take advantage of the IIoT. The industrialized world needs energy, and the demand for hydrocarbons will not diminish in our lifetimes. But with the price of oil hovering around $50/barrel, companies need to reduce costs to be profitable. A recent MIT Sloan School of Management case study (1) estimated that an unproductive day in a liquefied natural gas (LNG) facility could cost as much as $25 million. It also estimated that this happens an average of five times a year, costing a company $125 million per year per facility in unplanned downtime.

The IIoT could very well solve this problem, as it provides enterprise-wide visibility into operations. For example, by implementing today’s analytics and machine learning technologies, companies can detect and alert on process and equipment anomalies to proactively prevent unexpected downtime or out-of-spec products, decreasing or eliminating those $125 million per year costs. In addition, more producers are trying to seamlessly connect and share data in real-time across many different enterprise systems (from geoscience to accounting to marketing to compliance) as well as improve visibility of SCADA and measurement data from the field. All areas of the industry want to increase efficiencies and switch from preventative maintenance to less costly predictive and condition-based maintenance.

Reports by Gartner and Cisco estimate that there will be 25 to 50 billion connected devices by 2020, compared to the 4.9 billion that were estimated to be connected in 2015 (2). That is a significant increase, and while not all of the devices will be industrial rather than consumer, the reports indicate that the majority of the increase will come from the industrial sector.

This increase will likely come from a combination of new smart connected products and sensors and gateways outfitted for existing legacy equipment. Since industrial equipment is typically designed to last for 20 to 30 years, the majority of connected devices for the foreseeable future will be legacy equipment already operating in the plant or field. The types of sensors being retrofitted to these machines include air quality, temperature, pressure, vibration, energy usage, proximity, accelerometer, and more. Devices sometimes referred to as “IoT gateways” are also coming to market, providing the ability to collect data from these sensors and send it back to the enterprise.

The Challenges of the IIoT

Despite the potential benefits of the IIoT, concerns about implementation remain. In today’s world of cyber attacks, security is always at the forefront of any new technology discussion. The Energy Industry is already a target because of the volatile nature of its products and the catastrophic impact that cyber attacks could have on infrastructure and on society at large. Many of the protocols that are used today for industrial communications are not secure; some were specifically stripped down and designed for low bandwidth networks back in a time when security was not a concern. The influx of connected devices sending unsecured data, along with companies opening up networks to the Internet without enhancing network security, has raised concerns about the threat of exploitation.

In addition, existing telemetry networks often have bandwidth limitations for remote assets. Energy companies are already struggling to support the number of connected SCADA devices across their networks today. For example, an industrial oil and gas operator could have 10,000 to 20,000 legacy devices located remotely across multiple production sites with limited connectivity, power, and network bandwidth. Depending on the device, anywhere from a couple thousand bits to gigabytes of data could be produced and updated in milliseconds. As more devices come online, data production increases exponentially, consuming new levels of required bandwidth and often leading to service degradation, data latency, and increased costs.

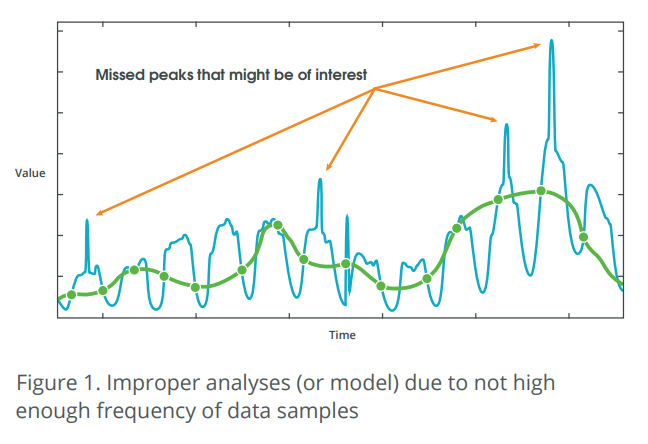

The need to collect higher frequency data for analytical purposes only increases the burden on the networks. The data scientist that uses the analytics and machine learning applications requires high frequency data in order to truly understand a process or machine. For example, while SCADA may have required 1 to 5 second data to monitor an asset, analytics applications might be looking for 10 to 50 millisecond data. Not sampling the data at a high enough frequency could result in a completely different analysis or modeled behavior—and potentially miss peaks that could be of significance.
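The effect of sampling rate on what an analysis can see is easy to illustrate. The sketch below is only an illustration under assumed values: the signal, the 30 ms spike, and the function names `simulate_signal` and `sample_max` are hypothetical and do not come from any real asset.

```python
import math


def simulate_signal(t_ms: int) -> float:
    """Hypothetical sensor signal: slow baseline plus one 30 ms spike."""
    baseline = 1.0 + 0.1 * math.sin(2 * math.pi * t_ms / 500.0)
    spike = 5.0 if 1210 <= t_ms < 1240 else 0.0  # assumed short transient
    return baseline + spike


def sample_max(period_ms: int, duration_ms: int = 5000) -> float:
    """Largest value observed when sampling every period_ms milliseconds."""
    return max(simulate_signal(t) for t in range(0, duration_ms, period_ms))


scada_peak = sample_max(period_ms=1000)    # 1 s SCADA-style polling
analytics_peak = sample_max(period_ms=10)  # 10 ms analytics-style sampling

print(f"1 s sampling sees a maximum of {scada_peak:.2f}")
print(f"10 ms sampling sees a maximum of {analytics_peak:.2f}")
```

With these assumed values, the 1 second samples fall on either side of the spike and never record it, while the 10 millisecond samples capture it.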

For this amount of data to be available for analytics and machine learning applications, a significant increase of existing data communication networks is required. According to Dr. Satyam Priyadarshy, Chief Data Scientist of Halliburton Landmark:

The growing need to better understand the subsurface, have cost-effective drilling operations, and achieve highly efficient reservoir management has led to the creation of huge amounts of data. This data is coming from a large number of sensors, devices and equipment at higher frequencies than ever before, inundating existing information infrastructure. Yet, the oil and gas industry generates value from a mere 1% of all the data it creates, according to the McKinsey Report 2015 [CNBC 2015] (4, 5).

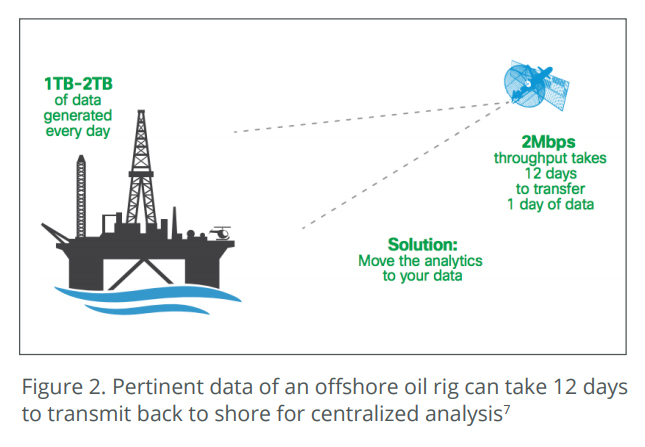

Network latency is also a concern. If data is not used immediately, its usefulness is all but lost. Sending data to the cloud (which could just mean from the field to the enterprise or centralized data processing center) for time-critical monitoring and analysis is not a suitable use of cloud computing. For example, Cisco (7) estimates that an offshore oil platform generates between 1 TB and 2 TB of time-sensitive data per day related to platform production and drilling platform safety. With satellite (the most common offshore communication link), the data speeds range from 64 kbps to 2 Mbps. By this estimate, it takes 12 days to transmit one day’s worth of data back to a central site for processing, and 12 days is definitely not “real-time.” Latency such as this could have significant operational and safety implications.
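As a rough check on the scale of this problem, the transfer time can be worked out directly from the data volume and link speed. The sketch below assumes decimal units (1 TB = 10^12 bytes), a fully utilized link, and no protocol overhead; none of these assumptions are stated in the source, and the function name `transfer_days` is hypothetical.

```python
SECONDS_PER_DAY = 86_400


def transfer_days(data_terabytes: float, link_bits_per_second: float) -> float:
    """Days needed to move the data over the link (assumes 1 TB = 1e12 bytes,
    100% link utilization, and no protocol overhead)."""
    bits = data_terabytes * 1e12 * 8
    return bits / link_bits_per_second / SECONDS_PER_DAY


for terabytes in (1, 2):
    for label, bps in (("64 kbps", 64e3), ("2 Mbps", 2e6)):
        days = transfer_days(terabytes, bps)
        print(f"{terabytes} TB over {label}: about {days:,.0f} days")
```

Under these assumptions, even 1 TB over the fastest quoted link (2 Mbps) takes roughly 46 days, so the 12-day figure is, if anything, conservative.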

Solving Challenges via Data Collection and Analytics at the Edge

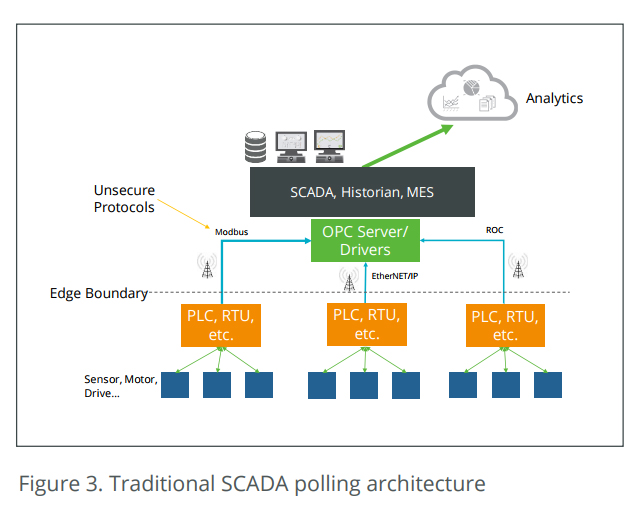

In the traditional SCADA data collection architecture, all data sources in the field are polled from a centralized host. This requires all raw data to be requested and provided across the network so that it can be stored, monitored, and analyzed back in the enterprise (SCADA, Historian, analytics, and so forth).

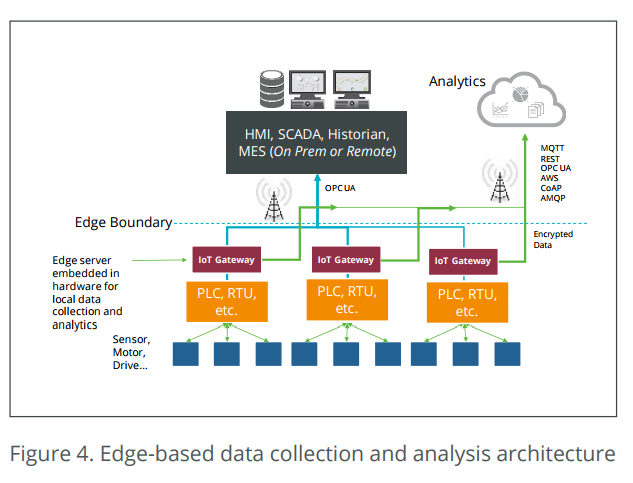

But industry leaders see pushing IIoT data collection, and some analytics, to “the edge” as a potential solution to alleviate network bandwidth limitations and security concerns. For the purposes of this paper, the “edge” is defined as the network entry points or data sources that are in the field on the opposite end of the network from the centralized host. In networking terms, an edge device provides an entry point into enterprise or service provider core networks. Examples include routers, routing switches, integrated access devices, multiplexers, and a variety of local area network (LAN) and wide area network (WAN) access devices. Devices and sensors built for the IIoT with access to the network are also considered edge devices (8).

Across the industry, the price and form factor of processors keep decreasing, allowing computing and data storage that do not need to be centralized to be moved away from the server where enterprise-level applications reside. This enables companies to distribute their computing to the edge of the network through low-cost gateways and industrial PCs that can host localized and task-specific actions in near real-time and transmit only the required, much smaller volume of data back to the enterprise. This data can also be transmitted with more modern protocols, such as MQTT, OPC UA, AMQP, and CoAP, that are designed for efficiency and security. Locating the edge gateway on-site in the field and connecting it directly to the data sources helps alleviate the security concerns of communicating with those data sources over a wide area network with an unsecure protocol.

Not all analysis and processing needs to happen at the edge to provide benefits, however. Simple analytics that would reduce data throughput include data consolidation and data reduction. For example, a simple data reduction could be publishing only data changes across the network. If the value of an endpoint has not changed, there is no need to publish that same value across the network. Deadbanding provides a way to only publish a new value if that value has changed outside an allowable range. Data consolidation allows data collection from the device at a faster rate locally, and then publishing all of the samples in a single request. Finally, encrypting the data for secure transmission is another example of relatively simple analytics and manipulation of the data at the edge. Of course, data transmission is not free—reducing the amount of data transmitted across the network is a potential cost savings benefit as well.
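The deadbanding and data consolidation steps described above can be sketched in a few lines. This is a minimal illustration rather than any vendor’s implementation: the class `DeadbandFilter`, the function `consolidate`, the readings, and the deadband of 1.0 are all hypothetical.

```python
from typing import Iterable, List, Optional, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, value)


class DeadbandFilter:
    """Passes a value on only when it has moved more than `deadband`
    away from the last value that was published."""

    def __init__(self, deadband: float) -> None:
        self.deadband = deadband
        self.last_published: Optional[float] = None

    def should_publish(self, value: float) -> bool:
        if (self.last_published is None
                or abs(value - self.last_published) > self.deadband):
            self.last_published = value
            return True
        return False


def consolidate(samples: Iterable[Sample], batch_size: int) -> List[List[Sample]]:
    """Groups samples into batches so each batch can be sent as one message."""
    batches: List[List[Sample]] = []
    current: List[Sample] = []
    for sample in samples:
        current.append(sample)
        if len(current) == batch_size:
            batches.append(current)
            current = []
    if current:  # keep the final, partially filled batch
        batches.append(current)
    return batches


# Hypothetical readings collected locally at a fast rate.
readings = [(0.0, 100.0), (0.1, 100.2), (0.2, 100.1),
            (0.3, 101.5), (0.4, 101.6), (0.5, 99.0)]

deadband_filter = DeadbandFilter(deadband=1.0)
changed = [s for s in readings if deadband_filter.should_publish(s[1])]
batches = consolidate(changed, batch_size=2)

print(f"{len(readings)} readings reduced to {len(changed)} values "
      f"in {len(batches)} messages")
```

In this example, six raw readings become three published values carried in two messages. The deadband is measured against the last published value rather than the last reading, so slow drift is still reported once it accumulates past the allowed range.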

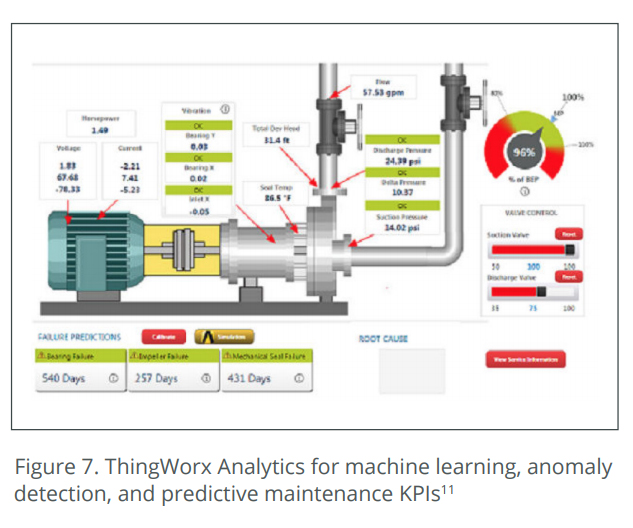

More advanced analytics can also be pushed to the edge. By storing the data locally, models can be developed and patterns recognized by analytic applications running on the local gateway or industrial PC. Descriptive analytics can turn data into more meaningful information. The KPIs to determine how a machine is running might be just a few data points that are computed from several different sensor readings, which could be done locally with just the KPI information transmitted from the edge to the enterprise.
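As an illustration of computing KPIs locally, the sketch below reduces a window of raw sensor readings to three summary values that could be transmitted in place of the raw data. The sensor fields, the chosen KPIs, and the names `Reading` and `compute_kpis` are assumptions made for this example, not a prescribed set.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Reading:
    """One hypothetical set of pump sensor values."""
    vibration_mm_s: float
    temperature_c: float
    power_kw: float
    flow_m3_h: float


def compute_kpis(window: List[Reading]) -> Dict[str, float]:
    """Reduces a window of raw readings to a few KPI values."""
    if not window:
        raise ValueError("window must contain at least one reading")
    rms_vibration = math.sqrt(
        sum(r.vibration_mm_s ** 2 for r in window) / len(window))
    total_power = sum(r.power_kw for r in window)
    total_flow = sum(r.flow_m3_h for r in window)
    return {
        "rms_vibration_mm_s": rms_vibration,
        "max_temperature_c": max(r.temperature_c for r in window),
        # kW divided by m3/h gives energy used per cubic meter pumped.
        "specific_energy_kwh_m3": (
            total_power / total_flow if total_flow else float("inf")),
    }


window = [
    Reading(vibration_mm_s=3.0, temperature_c=60.0, power_kw=50.0, flow_m3_h=100.0),
    Reading(vibration_mm_s=4.0, temperature_c=62.0, power_kw=50.0, flow_m3_h=100.0),
]
kpis = compute_kpis(window)
print(kpis)
```

However many raw readings the window holds, only these three numbers would need to cross the network.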

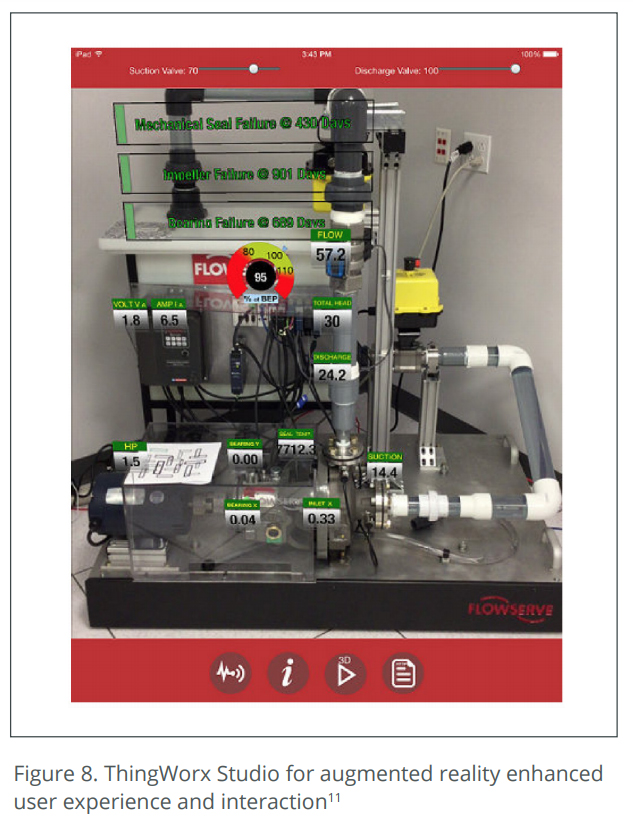

Even more advanced are predictive analytics on the edge, where machine learning techniques and applications can be applied to predict certain outcomes before they happen. This could be done locally at the edge with periodic updates on predicted outcomes, or alerts if undesirable trends are predicted. Prescriptive analytics, which use optimization and simulation algorithms to recommend changes to achieve a desired result or state, may or may not make sense to be performed at the edge, depending on the scenario. The ability to make decisions locally and quickly based on collected and analyzed data can significantly improve an organization’s efficiency and safety. Instead of performing centralized data analytics to determine what caused downtime (or even worse, a disaster) after it happens, the Energy Industry could see an undesired state or accident before it happens and prevent it entirely with real-time predictive analysis.
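A very simple stand-in for predictive analytics at the edge is to fit a trend to recent readings and estimate when a limit will be reached. The sketch below uses an ordinary least-squares line rather than a machine learning model, and the temperature history, the 85 degree limit, and the function names are hypothetical.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (time in hours, measured value)


def fit_line(points: List[Point]) -> Tuple[float, float]:
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(points)
    mean_t = sum(t for t, _ in points) / n
    mean_v = sum(v for _, v in points) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in points)
    if var_t == 0:
        raise ValueError("need at least two distinct timestamps")
    slope = sum((t - mean_t) * (v - mean_v) for t, v in points) / var_t
    return slope, mean_v - slope * mean_t


def hours_until_threshold(points: List[Point], threshold: float) -> Optional[float]:
    """Estimated hours after the latest reading until the fitted trend
    reaches the threshold; None if the trend is flat or falling."""
    slope, intercept = fit_line(points)
    last_t = points[-1][0]
    if slope * last_t + intercept >= threshold:
        return 0.0  # the fitted trend is already at or above the limit
    if slope <= 0:
        return None
    return (threshold - intercept) / slope - last_t


# Hypothetical bearing temperatures rising one degree per hour.
history = [(0.0, 70.0), (1.0, 71.0), (2.0, 72.0), (3.0, 73.0)]
eta = hours_until_threshold(history, threshold=85.0)
if eta is not None:
    print(f"Alert: limit predicted in about {eta:.1f} hours")
```

A gateway running logic like this would only need to send an alert and the predicted time, not the underlying readings. A production system would likely use a more robust model and account for noise in the data.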

A large factor in how much analytics to push to the edge will be how much bandwidth is available in each setting. It might make sense to just do data reduction, consolidation, and encryption at the edge, and let the cloud or host do the majority of the enhanced analytics. In more challenging communication scenarios, more analytics are likely to get pushed to the edge. In cases where instant analysis and decisions need to be made, the more analytics performed (and decisions made) at the edge, the better.

Hybrid implementations (where some analytics are performed at the edge and some are performed in a centralized repository host like the cloud, enterprise, and so forth) are the likely first step for much of the industry. Raghuveeran Sowmyanarayanan, the Vice President at Accenture, says that:

Edge analytics will exist in addition to, but won’t replace, traditional big data analytics done in the enterprise data warehouse (EDW) or logical data warehouse (LDW). Data scientists will still process the majority of large, historical data sets for such purposes as price optimization and predictive analytics.(9)

“The Fog”

The “fog” is a term that Cisco coined to define intelligence computing that is pushed down to the local area network level of network architecture—processing data in a fog node or IoT gateway. In this network description, edge computing pushes the intelligence, processing power, and communication capabilities of an edge gateway or appliance directly into devices like Programmable Logic Controllers (PLCs) and Programmable Automation Controllers (PACs). When considering local data collection and analysis at an offshore rig, a wellsite in West Texas or North Dakota, or a wind farm on a mountain range in Colorado, it doesn’t matter if the analysis is performed in actual devices like PLCs or PACs or in edge gateways. The main concern is ensuring that the raw, unsecure data is not sent across large geographic distances through a potentially public network to a centralized site for analysis and action.

The Many Components of an Edge Solution

At a high level, this concept of edge-based data collection and analytics might appear simple, but the devil is in the details. There are many components of an edge architecture, and it is likely that the solution will include hardware and software from multiple vendors. In their January 2017 report on edge analytics (10), ABI Research stressed the importance of partnerships in accommodating edge solutions:

Partnerships remain critically important in forming strong edge solutions: the heterogeneity of machines, sensors, and connected equipment in a hybrid compute environment necessitates various actors and their solutions to work in harmony to maximize business value.

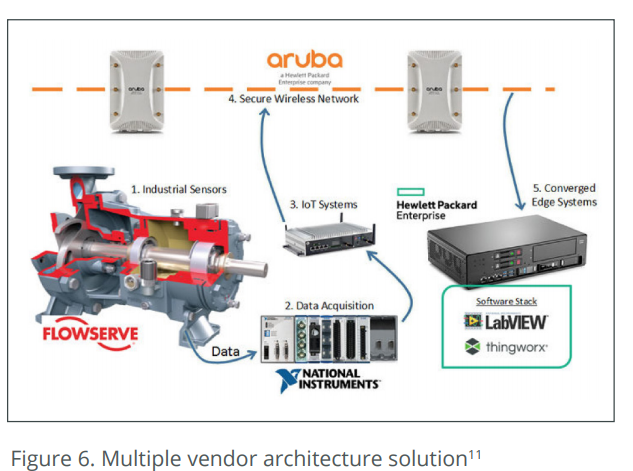

For example, Flowserve, Hewlett Packard Enterprise (HPE), National Instruments, and PTC collaborated to create an industrial condition monitoring solution that runs on the network edge in real-time.

As shown in Figure 6, sensors within the Flowserve pump capture various analog parameters like vibration, temperature, flow rate, voltage, and current. The National Instruments RIO data acquisition system converts the analog signals to digital and normalizes the data. An HPE Edgeline IoT system acquires the data locally and routes it through the local and secure wireless network provided by the Aruba access points. The HPE Converged Edge Systems device hosts ThingWorx (PTC) and LabVIEW (National Instruments) software, which perform real-time data acquisition, analytics, condition monitoring, system control, and even augmented reality for enhanced user experience and equipment interaction. From there, the data can be transmitted to a centralized data center via secure wireless, wired, or wide area networks.

Communication Improvements

The bandwidth and latency of the industry’s communication networks are continuously improving. In 2005, Dr. Phil Edholm, Nortel’s Chief Technology Officer and Vice President of Network Architecture, observed that communication bandwidth doubles approximately every two years (6). This observation still holds today: All industry communication technologies have close to doubled in throughput about every two years.
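The doubling relationship described above can be written as a simple projection. The sketch below takes the two-year doubling period from the text; the base rate, the years, and the function name `projected_throughput` are hypothetical, and the result is an extrapolation rather than a forecast.

```python
def projected_throughput(base_rate: float, base_year: int, target_year: int,
                         doubling_years: float = 2.0) -> float:
    """Throughput in target_year if it doubles every doubling_years,
    starting from base_rate in base_year."""
    return base_rate * 2 ** ((target_year - base_year) / doubling_years)


# Hypothetical example: a link rated 1.0 (arbitrary units) in 2015.
growth = projected_throughput(base_rate=1.0, base_year=2015, target_year=2025)
print(f"Projected throughput after ten years: {growth:.0f}x the 2015 rate")
```

Ten years at a two-year doubling period is five doublings, or a 32-fold increase over the base rate.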

For example, in 1995 there were 900 MHz license-free radios that could transmit at rates from 1,200 baud to 115 Kbaud. The data throughput restriction in the field was the RS-232 connectivity that ranged from 1,200 baud to 19.2 Kbaud through the 1990s and into 2000. Before 2010, IP devices were starting to spread through the field. From that point to today, port connectivity has outpaced wireless technology. Although we started seeing 1 Mbaud radios (a vast improvement) in 2015, IP connectivity still outpaces radio throughput.

5G technology, which promises 5 to 10 Gbps throughput, is supposed to be released at the end of 2017. Telecom companies have started discussions regarding private infrastructure technology for remote locations to support data cell modems capable of supporting industrial wireless for well pads and other remote site locations.

These communication improvements show the promise of the IIoT in the field. Communication has always been at the heart of SCADA, and SCADA professionals are experts at recognizing the potential of new technologies and at developing applications to adapt and enhance them to best suit their environment. So it is no surprise that when faced with the IIoT, the SCADA community asks, “How will all this wonderful IoT data flow?” As bandwidth and latency issues are resolved, telecom, automation, SCADA professionals, and others will see the clear benefits of the IIoT.

Conclusion

Edge-based data collection and analytics are solving some of the challenges presented by implementing the IIoT. Collecting data locally and directly from a data source in the field can reduce security concerns. Performing analytics—ranging from data reduction and consolidation to machine learning and condition-based monitoring—at the edge can reduce the required volume of data transmission across limited bandwidth networks and enable real-time analysis and decision-making for optimal operational excellence and safety. With vendors across the industry coming together to provide comprehensive solutions that make edge-based data collection and analysis possible, the Energy Industry will benefit from improved safety, efficiency, and productivity at every level across the enterprise.

Sources

1. Winig, Laura. “GE’s Big Bet on Data and Analytics,” http://sloanreview.mit.edu/case-study/ge-big-bet-on-data-and-analytics/

2. Biron, Joe and Jonathan Follett. “Foundational Elements of an IoT Solution,” 2016.

3. DeLand, Seth and Adam Filion. “Data-Driven Insights with MATLAB Analytics: An Energy Load Forecasting Case Study,” https://www.mathworks.com/company/newsletters/articles/data-driven-insights-with-matlab-analytics-an-energy-load-forecasting-case-study.html

4. Priyadarshy, Dr. Satyam. “What It Takes to Leverage E&P Big Data,” https://www.landmark.solutions/Portals/0/LMSDocs/Whitepapers/What-It-Takes-To-Leverage-BigData.pdf

5. McKinsey Global Institute. “The Internet of Things: Mapping the Value Beyond the Hype,” 2015.

6. Cherry, Steven. “Edholm’s Law of Bandwidth,” http://www.ece.northwestern.edu/~mh/MSIT/edholm.pdf

7. Cisco. “A New Reality for Oil & Gas,” http://www.cisco.com/c/dam/en_us/solutions/industries/energy/docs/OilGasDigitalTransformationWhitePaper.pdf

8. Gorbach, Greg. “The Power of the Industrial Edge,” https://industrial-iot.com/2016/11/4006/

9. Sowmyanarayanan, Raghu. “Convergence of Big Data, IoE, and Edge Analytics,” https://tdwi.org/articles/2016/01/26/big-data-ioe-and-edge-analytics.aspx

10. ABI Research. “Edge Analytics in IoT: Supplier and Market Analysis for Competitive Differentiation,” https://www.abiresearch.com/market-research/product/1026092-edge-analytics-in-iot/

11. Hewlett Packard Enterprise. “Real-time Analysis and Condition Monitoring with Predictive Maintenance,” http://h20565.www2.hpe.com/hpsc/doc/public/display?docId=c05336741

The content & opinions in this article are the author’s and do not necessarily represent the views of ManufacturingTomorrow
